Project ideas#

Below, we give you some ideas for projects in Python for the last part of the course. These are meant to be an illustration of what one can achieve with programming.

However the best project is what you find useful: if you know a topic you want to learn the most, this course is the best time to get started. Consider something you want to do, formulate it as a project and share with us.

Organizing your work#

In order to make this experience most useful, follow these rules:

Don’t work alone, find yourself a group of people with who you are going to collaborate.

Plan ahead: don’t just start hacking; rather discuss how the project is going to work out, which parts it consists of, and what difficulties you would expect.

Use git to coordinate your work. For a small project it may seem like an overkill, but consider it a training experience.

think of how to organize your code so that it is reusable.

Add tests checking your code (more on this will come later).

Separate different parts of the project among different participants.

Read what your collaborators write, try to understand what is happening in that code, and give feedback.

Projects#

Extract information from text#

Make a module to extract information from some text stored in a string. Possible functionality could be:

count number of words/letters in a text.

count how many times a word accurs in a text.

find all occurences of a word in a text, and output the word together with it’s surrounding. For example, output 5 words before or after, or the whole sentence.

Be creative!

The text can be also provided through a filename, or a URL. Adapt your code to accept different sources of text.

Can you implement a spellcheck? Can you find libraries that can help you work with natural language?

Peak finder#

Make a module for searching and fitting for resonant peaks in noisy data.

Your procedure needs to be resistant against noise, think how you are going to find the peaks.

Implement generating mock signal so that you can test your procedure systematically.

How would you estimate the error in the fit results?

Does your procedure work if the points are not measured homogeneously?

What about finding several peaks (an amount not known in advance)?

Latex converter#

Implement a script that tracks all the latex files in one folder and compiles them into pdf files in another folder. If you work with some other document type that has to be processed (e.g. raw data that has to be automatically processed, you can also do that).

It should run persistently, so that when a new file is added, modified, or an old one is removed, the pdfs are automatically updated. In terminal compiling a latex file into pdf is done by just running pdflatex mydocument.tex.

In this project you’ll need to call terminal programs from Python. Watching for updates in a folder can be done in many ways, but the easiest is probably just to check for changes every couple of seconds.

Image compression#

Here you are going to use a singular value decomposition (SVD) and some image processing.

Images can easily be represented as numpy arrays in python (for example, using matplotlib.image.imread for loading PNG files).

A simple to implement compression scheme for this data is based on the singular value decomposition (SVD): A \(N\times N\)-matrix \(A\) can be decomposed as \(A = U S V^\dagger\) where \(U\) and \(V\) are \(N\times N\) unitary matrices, and \(S\) is a \(N\times N\) diagonal matrix with positive entries \(s_i\) on the diagonal.

If we take only the largest \(M\ll N\) entries \(s_i\), and set the remaining \(s_i\) to zero, we get an approximation for \(A\): \(A \approx \tilde{U} \tilde{S} \tilde{V}^\dagger\), where \(\tilde{U}\) and \(\tilde{V}\) are now \(N\times M\) matrices (the first \(M\) columns of \(U\) and \(V\)), and \(\tilde{S}\) a \(M\times M\) matrix with the largest \(s_i\) on the diagonal. But if \(M \ll N\), we now need much less information to approximately store the image, and hence we compressed it.

Write a module for compressing images, writing compressed images to a file, reading it again and displaying the image on the screen.

Analyse arXiv data#

Make use of arXiv api to do simple visualisations:

count how many publications with word novel in title (abstract) appears each day (month, or even year) and plot it. Compare with a word revisit. How often do these two appear together?

make histogram of lengths (amount of words) of abstracts from every paper where your supervisor is one of authors

Advice:

search for information about

feedparser.parse, it may be useful

Markov Chain Decrypter#

This project follows on from the day 1 decryption project.

The goal is to create a system that is able to decrypt substitution ciphers using Markov Chain Monte Carlo (MCMC).

A substition cipher works by making a permutation of the letters of the alphabet. e.g. A -> C, B -> F, C -> Z, .... As there are 26 letters in the alphabet, this means that there are \(26!\) possible keys, which is far too many to check by hand.

One way of breaking such a cipher is to analyze a reference text, and calculate how often different pairs of letters appear after each other (i.e. the frequency of the bigrams). The basic idea of this project is to use an english reference text to calculate the probability that an decryption key is correct, and then use this probability on the space of decryption keys to construct a markov chain that will “walk” towards the correct key.

Section 1 of this PDF contains a description of the algorithm. More technical details can be found in this masters thesis

Steps (more or less)#

Obtain a reference text (e.g. war and peace).

Write a function to to compute the relative probability of all bigrams from a text

Write a function that transforms a ciphertext to a cleartext using a given decryption key

Use the functions from steps 2 and 3 to write a function that calculates the probability that a given decryption key is correct, using the relative bigram frequencies of the reference text and the cleartext obtained from the given key.

Starting from a random decryption key, “walk” through the keyspace using the metropolis-hastings algorithm with the probability function you defined in step 4.

Generate a walk with N steps, and estimate the decryption key.

Things to explore (once you have working code)#

How does the “quality” of the reference text affect the convergence of the decrypter? Try using a shorter text, or an amalgamation of simple texts (e.g. children’s stories)

How does the “quality” of the ciphertext affect convergence? (if the ciphertext was 2 characters, would you expect the method to converge well?)

QASM compilation#

Simulate and visualise a circuit of quantum gates expressed in QASM

Read QASM instructions from a textfile (day3/QASM samples/test1.qasm)

Calculate the measurement outcome probabilites by multiplying process matrices

Simulate an experiment (binomial measurement outcomes)

Visualize your circuit (using latex)

Test if your code also runs on another qasm textfile (test 2- test n)

Write your own QASM circuit and simulate it.

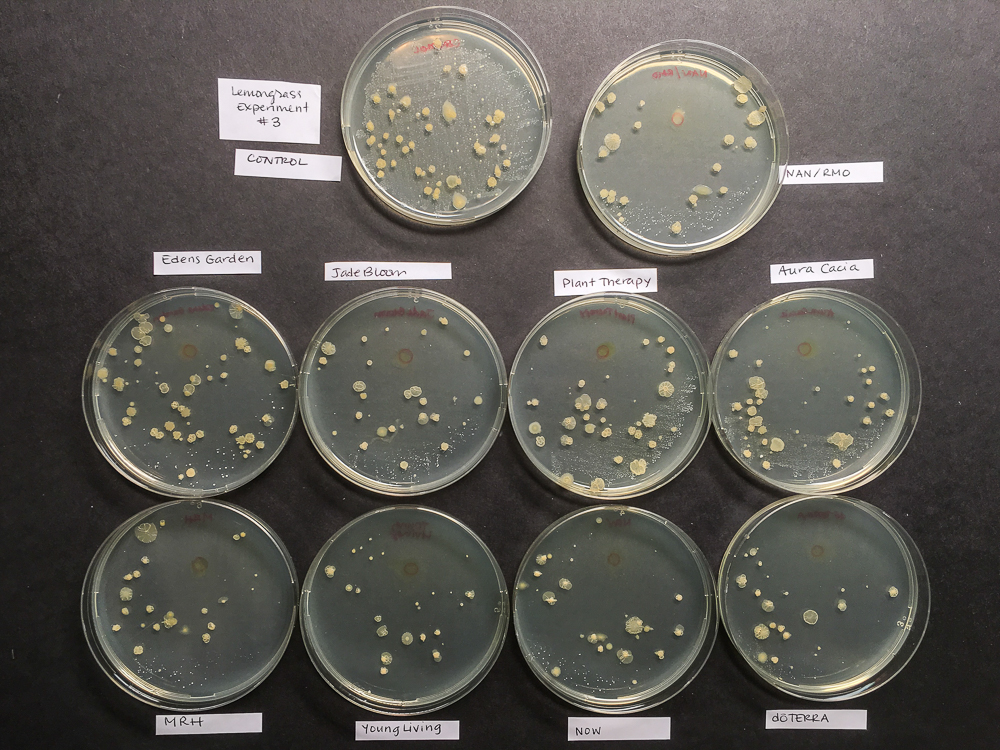

Bacterial colonies#

Implement your own tool for image recognition of bacterial colonies.

Use the image available in img/results_L3.jpg (included below) or any similar one you can find in google:

Convert the image to grayscale

Cut out individual petri dishes by adding a mask

Calculate a color histogram for your masked image

Calculate area filled with bacteria per petri dish